Right at the start of 2024 I was writing a lot about switching to Qubes, but then came my first bout of COVID, the Scraptop died, my desktop died, I received Pinky & Brain, the R430 we got proved bad (but OK because lots of working ram), caught COVID again, the #2 rack mount server started flaking out, and now my backup desktop shows the same signs the other one did right before its demise.

That’s a LOT to digest in twelve months. But I’ve gone the last two weeks running macOS and the rough edges are mostly smoothed.

Attention Conservation Notice:

This is just me talking through overall technology direction. I am more than half writing this because it beats doomscrolling in the grips of election anxiety …

Changing Course:

Where I once thought that there would be lots of front line people needing to protect themselves, I think what I’ve heard this year is …

This stuff is HARD.

This stuff ain’t cheap.

How can I be sure I won’t slip?

The people who are going to do things on their own already are, and they’ve picked up various tips along the way. What I think 2025 will bring is …

Supporting groups more than individuals.

BYOD but secure things with Hexnode.

Employ Hunchly’s Cloak as a hardened remote desktop.

There is much more to be had analyzing rather than sneaking about collecting.

If I’m not doing Qubes, the need for Dell/HP gear in my personal space declines precipitously. The only Linux that needs doing is replacing the dying Dell R420 with another R430, or maybe future proofing with room for a GPU by going with an R530.

An Apple A Day:

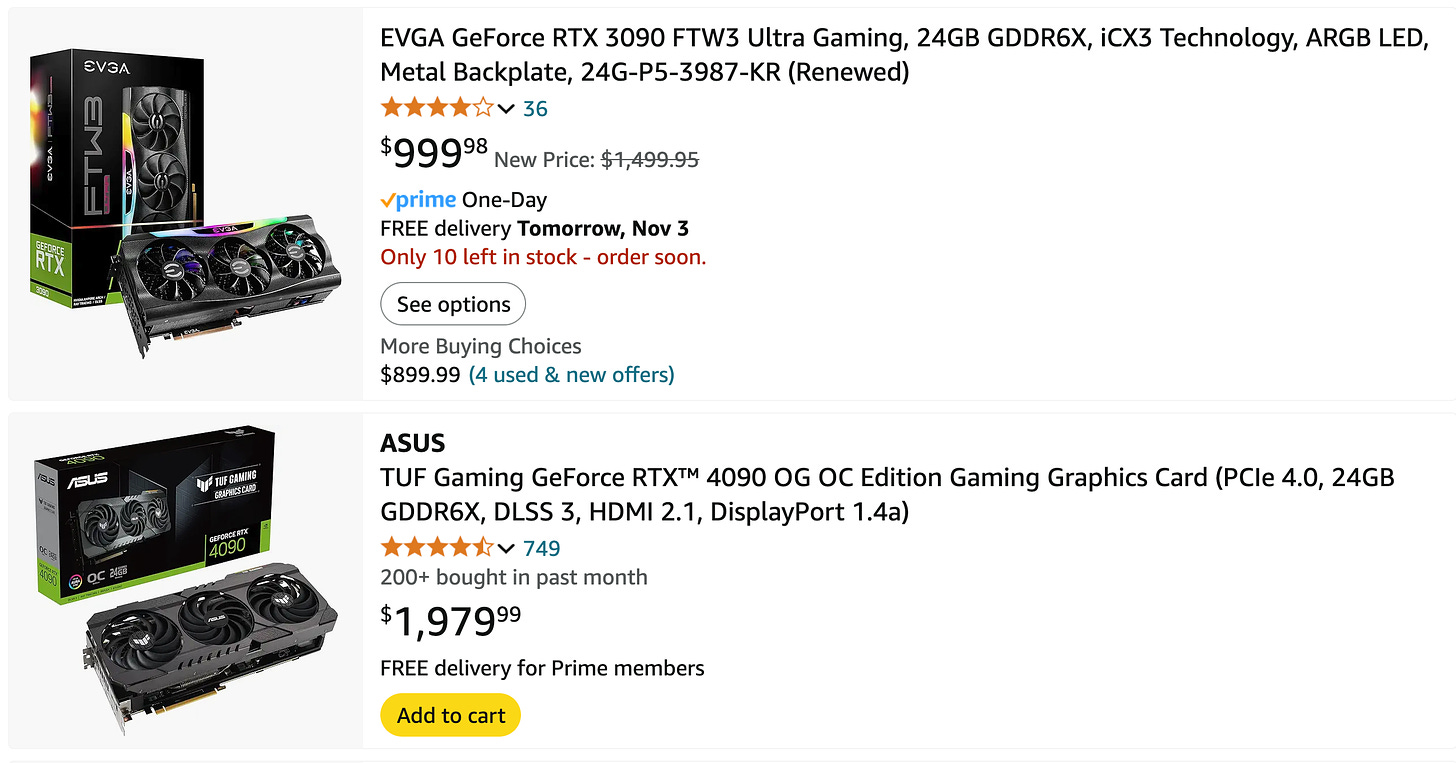

I am making the switch back to macOS after about six years and all I needed for it to be tolerable was Rectangle Pro, Shottr and Parallels. I am surprised a 16GB M1 processor machine behaves about as well as a 128GB Xeon in terms of Maltego, but it all works. I keep looking at the output of nvidia-smi on my desktop, seeing that dinky 6GB GTX 1060, and pondering a new GPU. If I want 24GB for LLMs it’s either $1k or $2k.

Or I can retire the forty pound HP Z420 under my desk and replace it with a two inch tall 5” x 5” Mac Mini. $1,000 will cover a 32GB M4 machine and $1,800 will bring a 48GB M4 Pro system to my door. Apple’s systems have “unified memory”, meaning the CPU and GPU share the system RAM. Since I’ll be tinkering, not running production, performance that is slightly less snappy than a dedicated GPU is an acceptable tradeoff for gaining much more room for large language models. The M4 has four performance cores and 120GB/sec of memory bandwidth; the M4 Pro has eight performance cores and 273GB/sec.
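A back-of-the-envelope sketch of that tradeoff, under loud assumptions: weights dominate memory use, each generated token reads every weight once (so memory bandwidth caps tokens per second), and some memory is held back for the OS and context. The function names, the 8GB reserve, and the example model sizes are illustrative, not measurements from any of these machines.

```python
def weights_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights alone, in GB (10^9 bytes)."""
    return params_billion * bits_per_weight / 8


def fits(params_billion: float, bits_per_weight: float,
         memory_gb: float, reserve_gb: float = 8.0) -> bool:
    """True if the weights fit after holding back reserve_gb.

    reserve_gb is an assumed allowance for the OS, apps and context
    cache; tune it for your own setup.
    """
    return weights_gb(params_billion, bits_per_weight) <= memory_gb - reserve_gb


def max_tokens_per_sec(bandwidth_gb_s: float, params_billion: float,
                       bits_per_weight: float) -> float:
    """Rough upper bound: bandwidth divided by bytes read per token."""
    return bandwidth_gb_s / weights_gb(params_billion, bits_per_weight)


if __name__ == "__main__":
    # Hypothetical 32B and 70B parameter models at 4-bit quantization.
    for params in (32, 70):
        for memory in (32, 48):
            print(params, "B model in", memory, "GB:", fits(params, 4, memory))
    # 120 GB/sec is the M4 figure quoted above; swap in other values to compare.
    print(max_tokens_per_sec(120, 32, 4))
```

Real throughput lands below this ceiling, but it is enough to see why memory size decides what loads at all and bandwidth decides how patient you need to be.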

And this isn’t really a need thus far; I just installed bpytop and I have yet to bang my head on the 16GB memory ceiling of the MacBook Pro. But I may get a long awaited cash infusion specifically to do hardware stuff, so I’ve been window shopping.

I’ve owned at least one Mac from every generation over the last thirty six years - Motorola 680x0, PowerPC, Intel, and now ARM based systems. I am woefully behind in understanding “Apple silicon”. For the last six years my setup has been entirely 2012 vintage Xeon E5 v2 machines with DDR3 memory. There are a lot of esoteric concerns with Intel server processors, like the fact that the ones I have are the last generation before they added the AVX2 Advanced Vector Extensions.
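On a Linux box, one way to see which side of the AVX2 line a processor falls on is to read the `flags` line in `/proc/cpuinfo`. A minimal sketch, assuming Linux on x86; the function names are made up for illustration.

```python
from pathlib import Path


def cpu_flags(cpuinfo_text: str) -> set[str]:
    """Return the feature flags from the first 'flags' line, or an empty set."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()


def vector_support(cpuinfo_text: str) -> dict[str, bool]:
    """Report whether the AVX and AVX2 flags are present."""
    flags = cpu_flags(cpuinfo_text)
    return {"avx": "avx" in flags, "avx2": "avx2" in flags}


if __name__ == "__main__":
    path = Path("/proc/cpuinfo")
    if path.exists():
        print(vector_support(path.read_text()))
    else:
        # macOS and other systems do not expose /proc/cpuinfo.
        print("No /proc/cpuinfo here; this check assumes Linux.")
```

A machine that reports `avx` but not `avx2` is in the same boat as the Xeons described above.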

Back before the turn of the century one had to pay very close attention to the details of chip architecture, bus slot timing, and so forth. Using retired enterprise grade Xeon systems freed me of that, but now with AI concerns I’m again taking notes on CUDA core counts and examining the minutiae of system memory performance.

Under Construction:

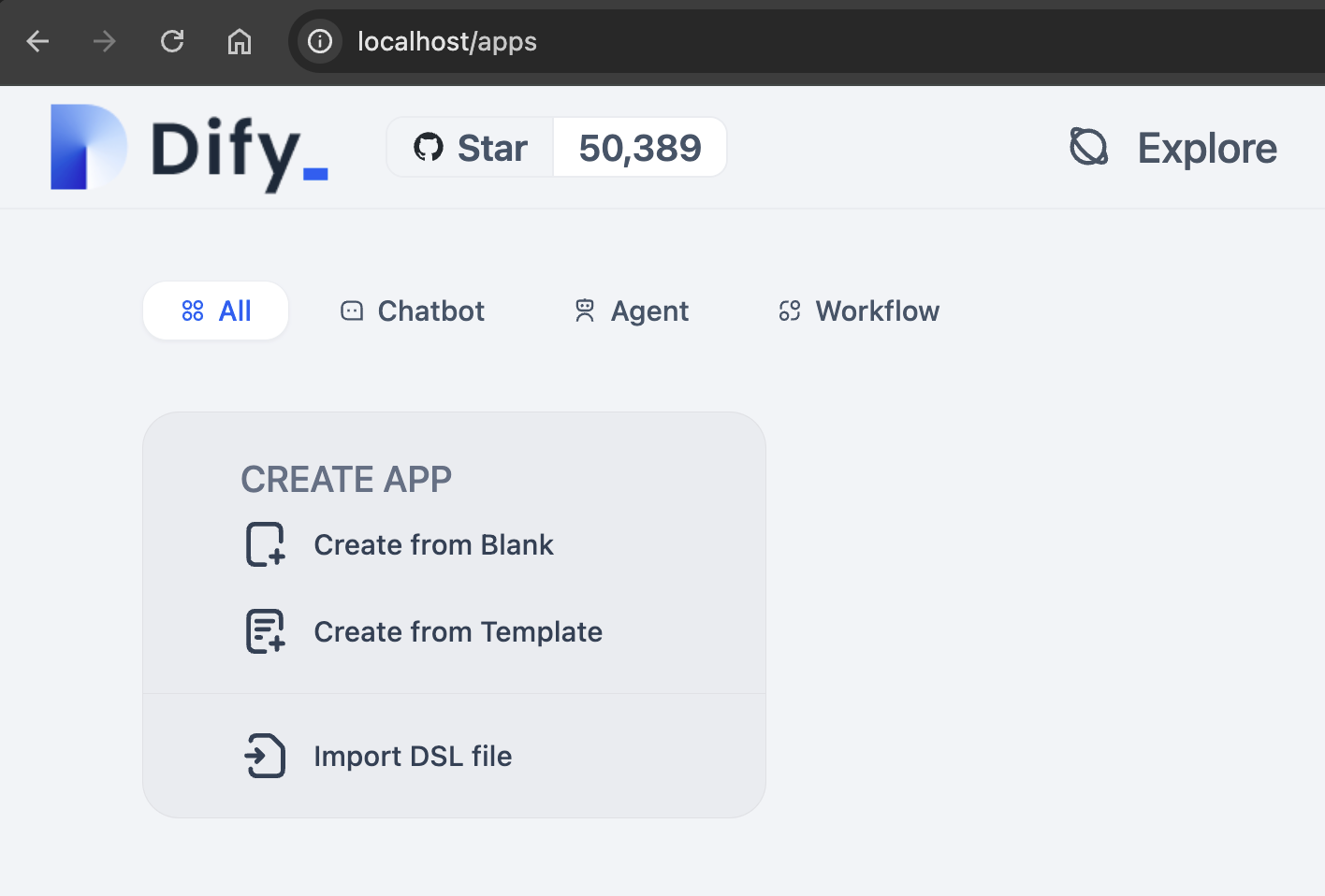

This weekend I pushed the right sequence of buttons to make this happen - the Does It For You AI construction kit running on my ARM based Mac. Making Docker behave takes a bit, because macOS doesn’t expect you to use the command line. Once that was resolved, it was fairly smooth sailing.

And Dify offers an extraordinary set of tools right out of the box - I count forty three templates …

And among them there are eleven workflows …

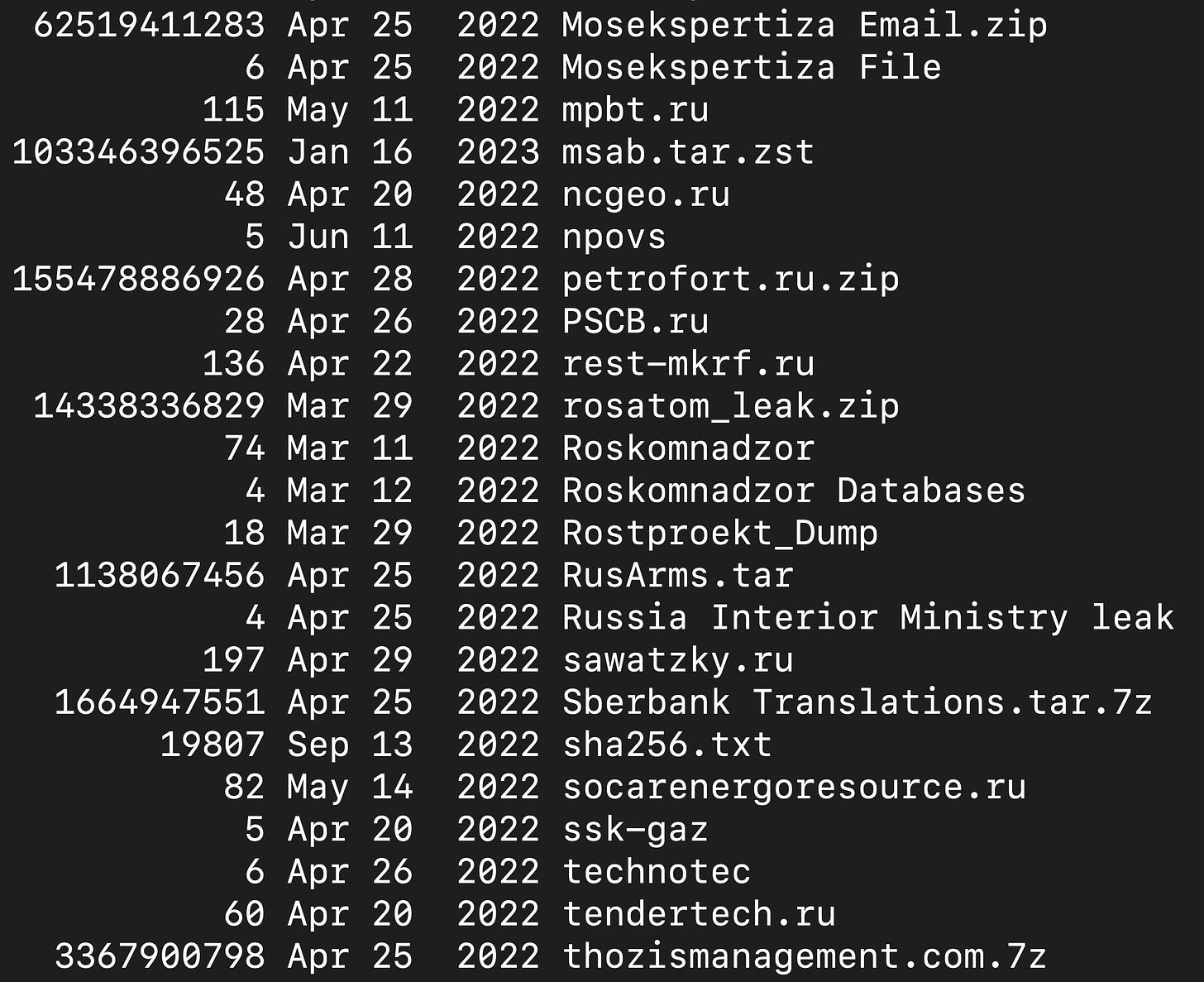

I see translation pipelines, there at the upper right. That matters because after the Malign Influence Operations Safari successes from just the PressTV data, I’ve been thinking a lot about the mirror I have of all the Russian leaks from DDoSecrets. This is only about a quarter of it.

Wetware:

The lack of capable hardware has been an issue; I tripped over having an ancient GPU, then stumbled hard having a Xeon that was just short of running LM Studio. But the real issue has been my cognitive bandwidth. My next big birthday is sixty and it’s not too far in the future. I have begun to grudgingly accept the constraints that one faces with age, and I’m not happy about it.

But I did just get most of my brain back sixteen months ago, after more than a decade of post-Lyme dysfunction, which has been truly marvelous. Things I would not have attempted when those torrents were collected in 2022 now seem like they might be in reach. Dify isn’t a production pipeline for a high volume fixed function enterprise system, but for just tinkering it’s perfect.

Looking slightly further ahead, here’s the real cognitive cliff I have to scale:

Basically, AI’s Retrieval Augmented Generation depends on having dense, orderly documents to assist in responses. If you can include a knowledge graph instead of counting on token proximity in documents, then you’ve really got something.
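To make that distinction concrete, here is a toy sketch contrasting the two retrieval styles. Everything in it is hypothetical: the three sentences, the triples, and the function names are invented for illustration, and this is not how Dify or any particular RAG stack does it.

```python
import re

# Made-up documents and the same facts expressed as graph triples.
DOCS = [
    "Alice founded Acme in 2010.",
    "Acme later acquired Widgetco.",
    "Bob is the chief engineer at Widgetco.",
]
TRIPLES = [
    ("Alice", "founded", "Acme"),
    ("Acme", "acquired", "Widgetco"),
    ("Bob", "works_at", "Widgetco"),
]


def tokens(text):
    """Lowercased word set; a crude stand-in for real tokenization."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve_by_overlap(query, docs, k=1):
    """Rank documents by how many words they share with the query."""
    q = tokens(query)
    ranked = sorted(docs, key=lambda d: len(q & tokens(d)), reverse=True)
    return ranked[:k]


def retrieve_by_graph(entity, triples, hops=2):
    """Collect triples reachable within `hops` edges of `entity`."""
    frontier, seen, found = {entity}, {entity}, []
    for _ in range(hops):
        next_frontier = set()
        for triple in triples:
            subject, _, obj = triple
            if (subject in frontier or obj in frontier) and triple not in found:
                found.append(triple)
                for node in (subject, obj):
                    if node not in seen:
                        seen.add(node)
                        next_frontier.add(node)
        frontier = next_frontier
    return found


if __name__ == "__main__":
    print(retrieve_by_overlap("What did Alice start?", DOCS))
    print(retrieve_by_graph("Alice", TRIPLES, hops=2))
```

Word overlap only surfaces the sentence that happens to share a term with the question, while walking the graph from the same starting entity also pulls in the connected acquisition, which shares no words with the question at all.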

Conclusion:

I groused a lot about Linux when I had to retire my Intel MacBook Pro back in 2019. Switching to Ubuntu Budgie cured most of the trouble. Coming back to macOS, all I needed were a couple of simple tools (Rectangle, Shottr) to smooth out most of the rough spots.

Using Hunchly’s remote desktop Cloak has been a surprising bright spot - macOS hot keys have no effect in that environment, and the Linux hot keys are ignored by macOS. I’m able to use both desktop and remote in a fairly fluid fashion without the periodic insult of intending to close a Cloak window and instead closing the entire Cloak session.

When I talk to my peers, I’m just a step or two behind those who are spending a lot of time actually running LLMs against their data … but then I see articles like this, and I know what Neanderthals felt, watching skinny, fast moving newcomers entering their valley …